Using Reddit's API for Predicting Comments¶

What characteristics of a post on Reddit contribute most to what subreddit it belongs to?¶

In this project, we will practice two major skills.

- Collecting data via an API request.

- Building a binary predictor.

Your method for acquiring the data will be scraping threads from at least two subreddits.

Once you've got the data, you will build a classification model that, using Natural Language Processing and any other relevant features, predicts which subreddit a given post belongs to.

# Let's get the administrative stuff done first

# import all the libraries and set up the plotting

import requests

import json

import time

import pandas as pd

import numpy as np

from itertools import combinations

from sklearn.ensemble import BaggingClassifier, RandomForestClassifier, ExtraTreesClassifier, AdaBoostClassifier, GradientBoostingClassifier

from sklearn.feature_extraction import stop_words

from sklearn.feature_extraction.text import CountVectorizer, HashingVectorizer, TfidfVectorizer

from sklearn.linear_model import LogisticRegression, LogisticRegressionCV

from sklearn.metrics import accuracy_score

from sklearn.model_selection import train_test_split, cross_val_score, GridSearchCV

from sklearn.naive_bayes import MultinomialNB, BernoulliNB

from sklearn.neighbors import KNeighborsClassifier

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import StandardScaler, PolynomialFeatures

from sklearn.svm import SVC

from sklearn.tree import DecisionTreeClassifier

import matplotlib

import matplotlib.pyplot as plt

import seaborn as sns

plt.style.use('seaborn')

sns.set(style="white", color_codes=True)

colors_palette = sns.color_palette("GnBu_d")

sns.set_palette(colors_palette)

# GnBu_d

colors = ['#37535e', '#3b748a', '#4095b5', '#52aec9', '#72bfc4', '#93d0bf']

Pre-work. Demonstrate scraping Thread Info from Reddit.com¶

Set up a request (using requests) to the URL below.¶

*NOTE*: Reddit will throw a [429 error](https://httpstatuses.com/429) when using the following code:

res = requests.get(URL)

This is because Reddit has throttled python's default user agent. You'll need to set a custom User-agent to get your request to work.

res = requests.get(URL, headers={'User-agent': 'YOUR NAME Bot 0.1'})

# For example:

pre_work = False

if pre_work:

url = "http://www.reddit.com/r/boardgames.json"

headers = headers={'User-agent': 'gbkgwyneth Bot 0.1'}

res = requests.get(url, headers=headers)

res.status_code

Use res.json() to convert the response into a dictionary format and set this to a variable.

data = res.json()

if pre_work:

data = res.json()

# Some initial exploring of the data

['data', 'kind']

sorted(data.keys())

['after', 'before', 'children', 'dist', 'modhash']

sorted(data['data'].keys())

df = pd.DataFrame(data['data']['children'])

df.head()

df.shape

[post['data']['name'] for post in data['data']['children']]

Getting more results¶

By default, Reddit will give you the top 25 posts:

print(len(data['data']['children']))

If you want more, you'll need to do two things:

- Get the name of the last post: data['data']['after']

- Use that name to hit the following url: http://www.reddit.com/r/boardgames.json?after=THE_AFTER_FROM_STEP_1`

- Create a loop to repeat steps 1 and 2 until you have a sufficient number of posts.

*NOTE*: Reddit will limit the number of requests per second you're allowed to make. When you create your loop, be sure to add the following after each iteration.

time.sleep(3) # sleeps 3 seconds before continuing

This will throttle your loop and keep you within Reddit's guidelines. You'll need to import the time library for this to work!

I. Predicting posts from the Cape Cod and Galveston sub-Reddits¶

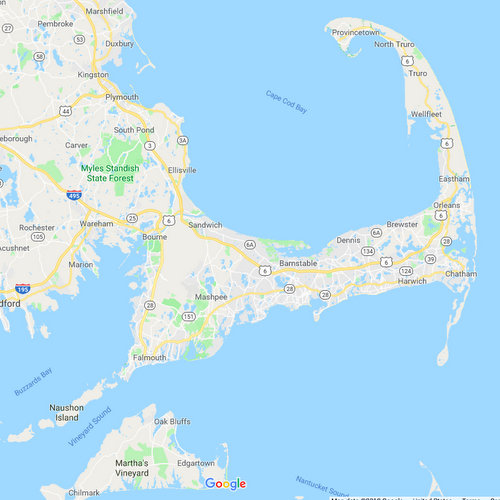

With no experience of Reddit, I had a challenge underatnding how it worked, what people discuss, and what types of "topics" would be interesting to predict. I settled on predicting posts from the r/CapeCod versus the r/galveston. If I were to start-over, I would definitely try to find more interesting topics, but I am not sure that I have yet spent enough time with Reddit to be able to pedict what those might be.

|  |

II. Gather the raw data from Reddit.¶

Save the scraped data as two CSV files or read previously scraped data from CSV files into two DataFrame.

# Function to scrape the data

def scrape_data(url,after):

headers = headers={'User-agent': 'gbkgwyneth Bot 0.1'}

posts = []

for _ in range(40):

if after == None:

params = {}

else:

params = {'after' : after}

res = requests.get(url, params=params, headers=headers)

if res.status_code == 200:

data = res.json()

posts.extend(data['data']['children'])

after = data['data']['after']

print(after)

else:

print("Problem with request!")

break

time.sleep(3)

return posts

# Do we want to scrape data or read from a csv file?

scrape = False

# Scrape posts following 'after' from the first URL

# Place them in a dataframe

# Export to a file

if scrape:

after = None # "t3_4kqn0c"

url_gv = "https://www.reddit.com/r/galveston.json"

scrape_gv = scrape_data(url_gv, after)

posts_gv = []

for i in range(len(scrape_gv)):

posts_gv.append(scrape_gv[i]['data'])

df_gv = pd.DataFrame(posts_gv)

df_gv.drop_duplicates(subset='title', inplace=True)

df_gv.to_csv(f'../data/reddit_gv.csv')

# Scrape posts following 'after' from the second URL

# Place them in a dataframe

# Export to a file

if scrape:

after = None # "t3_35fh6e"

url_cape = "https://www.reddit.com/r/CapeCod.json"

scrape_cape = scrape_data(url_cape,after)

posts_cape = []

for i in range(len(scrape_cape)):

posts_cape.append(scrape_cape[i]['data'])

df_cape = pd.DataFrame(posts_cape)

df_cape.drop_duplicates(subset='title', inplace=True)

# If not scraping, read from csv

if not scrape:

df_cape = pd.read_csv("../data/reddit_cape.csv")

df_gv = pd.read_csv("../data/reddit_gv.csv")

# Create the target vector

df_gv['is_cape'] = 0

df_cape['is_cape'] = 1

# Merge the dtafrmaes

df = df_gv.append(df_cape, sort=True)

df = df[['is_cape', 'title','id']]

df.set_index("id", inplace=True)

# Split the target vector from the dataframe

y = df['is_cape']

df.drop('is_cape', inplace=True, axis=1)

df.head()

# From https://stackoverflow.com/questions/16645799/how-to-create-a-word-cloud-from-a-corpus-in-python

from wordcloud import WordCloud, STOPWORDS

stopwords = set(STOPWORDS)

def show_wordcloud(data, title = None):

wordcloud = WordCloud(

background_color='white',

stopwords=stopwords,

max_words=200,

max_font_size=40,

scale=3,

random_state=1 # chosen at random by flipping a coin; it was heads

).generate(str(data))

fig = plt.figure(1, figsize=(12, 12))

plt.axis('off')

if title:

fig.suptitle(title, fontsize=20)

fig.subplots_adjust(top=2.3)

plt.imshow(wordcloud)

plt.show()

show_wordcloud(df_gv['title'])

show_wordcloud(df_cape['title'])

# Why Python and others

# df_gv[df_gv['title'].str.contains("Texas")]

# df_cape[df_cape['title'].str.contains("Texas")]

Train/test split¶

# Train/Test split

X_train, X_test, y_train, y_test = train_test_split(df, y, random_state=42, stratify=y)

Run a simple CountVectorizer and explore.

cv_simple = CountVectorizer(stop_words='english')

X_train_cv = cv_simple.fit_transform(X_train['title'])

cv_train = pd.DataFrame(X_train_cv.todense(), columns=cv_simple.get_feature_names())

Create a dataframe with counts of most common words¶

# Create a data frame of the most common words

n_words = 40

words = list(cv_train.sum().sort_values(ascending=False)[:n_words].index)

cv_train['is_cape'] = y_train.values

cv_train_small = cv_train.groupby('is_cape').sum()[words]

cv_train_small.head()

Plot the most common words¶

# Adapted from https://matplotlib.org/examples/api/barchart_demo.html

words_cape = words

words_count_cape = cv_train_small.loc[1]

words_gv = words

words_count_gv = cv_train_small.loc[0]

width = 0.35 # the width of the bars

ind = np.arange(n_words)

fig, ax = plt.subplots(figsize=(15, 10))

rects1 = ax.bar(ind, words_count_cape, width, color=colors[0])

rects2 = ax.bar(ind+width,words_count_gv, width, color=colors[5])

# add some text for labels, title and axes ticks

ax.set_ylabel('Counts')

ax.set_title('Counts by word and reddit')

ax.set_xticks(ind + width / 2)

ax.set_xticklabels(words,rotation='vertical')

ax.set_ylim(0,80)

ax.legend((rects1[0], rects2[0]), ('Cape Cod', 'Galveston'))

plt.show()

The most common words are not very surprising - place names.

Since the beach in Galveston faces southeast, 'sunset' is a Cape Cod term. And Galveston has floating casinos, thus 'gaming'.

Run a simple TfidfVectorizer and explore.

tv_simple = TfidfVectorizer(stop_words='english')

X_train_tv = tv_simple.fit_transform(X_train['title'])

tv_train = pd.DataFrame(X_train_tv.todense(), columns=tv_simple.get_feature_names())

tv_train.head()

Create a dataframe with counts of most frequent words¶

# Create a data frame of the most common words

n_words = 20

words = list(tv_train.sum().sort_values(ascending=False)[:n_words].index)

tv_train['is_cape'] = y_train.values

tv_train_small = tv_train.groupby('is_cape').sum()[words]

tv_train_small.head()

Plot the most frequent words¶

# Adapted from https://matplotlib.org/examples/api/barchart_demo.html

words_cape = words

words_count_cape = tv_train_small.loc[1]

words_gv = words

words_count_gv = tv_train_small.loc[0]

width = 0.35 # the width of the bars

ind = np.arange(n_words)

fig, ax = plt.subplots(figsize=(15, 5))

rects1 = ax.barh(ind, words_count_cape, width, color=colors[0])

rects2 = ax.barh(ind+width,words_count_gv, width, color=colors[5])

# add some text for labels, title and axes ticks

ax.set_xlabel('TF-IDF value')

ax.set_title('TF-IDF by word and reddit')

ax.set_yticks(ind + width / 2)

ax.set_yticklabels(words) #,rotation='vertical')

ax.set_xlim(0,20)

ax.legend((rects1[0], rects2[0]), ('Cape Cod', 'Galveston'))

plt.show()

From looking at the most frequent words, I think that without place names, it will be difficult to differentiate between the two sets of posts. But we'll give it the good old college try.

IV. Natural Language Processing (NLP)¶

Use CountVectorizer or TfidfVectorizer from scikit-learn to create features from the thread titles and descriptions (NOTE: Not all threads have a description).

- Examine using count or binary features in the model

- Re-evaluate your models using these. Does this improve the model performance?

- What text features are the most valuable?

As a first step to get a baseline, use a simple CountVectorizer and model with with regression.

# Basic CountVectorizer and LogisticRegression to get a simple first model

cv_simple = CountVectorizer()

X_train_cv = cv_simple.fit_transform(X_train['title'])

X_test_cv = cv_simple.transform(X_test['title'])

print("There are {} features in the model.".format(len(cv_simple.get_feature_names())))

lr_simple = LogisticRegressionCV(cv=3)

lr_simple.fit(X_train_cv, y_train)

score_train = lr_simple.score(X_train_cv, y_train)

score_test = lr_simple.score(X_test_cv, y_test)

# There are 3440 features in the model.

# Train score: 0.9695740365111561 Test score 0.8157894736842105

print("Train score: {} Test score {}".format(score_train, score_test))

The model seems to be quite overfit. If I could, I would gather more data.

# What are the words that best predict the target based on the coefficients

coef_names = cv_simple.get_feature_names()

coef_df = pd.DataFrame ({

'coefs' : coef_names,

'vals' : lr_simple.coef_[0]

}).set_index('coefs')

coef_df.reindex(coef_df['vals'].abs().sort_values(ascending=False).index)[:20].T

- The model appears to be overfit since the "training score" is much highter than the "testing score". Again, the words with the largest coefficients are mostly unsurprising.

- Galveston is an island and has a seawall.

- I do wonder why "water" has such a large coefficient.

- The word "hole" makes me think of "Woods hole", so I'll try bi-grams as well.

Second step: add in bi-grams, use a simple CountVectorizer, and model with with regression.

cv_gram = CountVectorizer(ngram_range=(1,2))

X_train_cvg = cv_gram.fit_transform(X_train['title'])

X_test_cvg = cv_gram.transform(X_test['title'])

print("There are {} features in the model.".format(len(cv_gram.get_feature_names())))

lr_simple = LogisticRegressionCV(cv=3)

lr_simple.fit(X_train_cvg, y_train)

score_train = lr_simple.score(X_train_cvg, y_train)

score_test = lr_simple.score(X_test_cvg, y_test)

# There are 11975 features in the model.

# Train score: 0.9959432048681541 Test score 0.8218623481781376

print("Train score: {} Test score {}".format(score_train, score_test))

The training score is much higher, but the test score is not much higher... And the mode is still overfit.

What is the baseline accuracy for this model?¶

print("The baseline accuracy for this model is {:.2f}%.".format(

cross_val_score(lr_simple, X_train_cvg, y_train).mean()*100))

Run a CountVectorizer and regression adding in a pipeline and grid search. Also, eliminate the stop words.

# Create the pipeline

pipe_cv = Pipeline([

('cv', CountVectorizer()),

('lr', LogisticRegression()),

])

params_grid_cv = {

'cv__stop_words' : [None, 'english'],

'cv__ngram_range' : [(1,1), (1,2)],

'cv__max_df' : [1.0, 0.95],

'cv__min_df' : [1, 2],

'cv__max_features' : [2000, 2250, 2500],

'lr__C' : [1, .05],

'lr__penalty' : ['l1', 'l2']

}

# Grid Search!

gs_cv = GridSearchCV(pipe_cv, param_grid=params_grid_cv, verbose=1)

gs_cv.fit(X_train['title'], y_train)

score_train = gs_cv.best_score_

score_test = gs_cv.score(X_test['title'], y_test)

params_train = gs_cv.best_params_

for k in params_grid_cv:

print("{}: {}".format(k,params_train[k]))

print("Train score: {} Test score {}".format(score_train, score_test))

# Fitting 3 folds for each of 192 candidates, totalling 576 fits

# cv__stop_words: english

# cv__ngram_range: (1, 2)

# cv__max_df: 1.0

# cv__min_df: 1

# cv__max_features: 2250

# lr__C: 1

# lr__penalty: l2

# Train score: 0.8079783637592968 Test score 0.8178137651821862

Take a look at the coefficients in the model to see which words best predict the target.¶

# What are the words that best predict the target?

coef_names = gs_cv.best_estimator_.named_steps['cv'].get_feature_names()

coef_vals = gs_cv.best_estimator_.named_steps['lr'].coef_[0]

coef_df = pd.DataFrame ({

'coefs' : coef_names,

'vals' : coef_vals

}).set_index('coefs')

coef_df.reindex(coef_df['vals'].abs().sort_values(ascending=False).index)[:20].T

Create a function to run grid search on anything we might want to investigate.¶

This could be generalized further, I'm sure, but for now it is enough.

def create_pipline(items, use_params, X_train, X_test, y_train, y_test):

# Add a pipe, add a param !

pipe_items = {

'cv': CountVectorizer(),

'tv': TfidfVectorizer(),

'hv': HashingVectorizer(),

'ss' : StandardScaler(),

'pf' : PolynomialFeatures(),

'lr' : LogisticRegression(),

'bnb' : BernoulliNB(),

'mnb' : MultinomialNB(),

'rf' : RandomForestClassifier(),

'gb' : GradientBoostingClassifier(),

'ab' : AdaBoostClassifier(),

'svc' : SVC(),

'knn' : KNeighborsClassifier()

}

# Include at least one param for each pipe item

param_items = {

'cv' : {

'cv__stop_words' : [None, 'english'],

'cv__ngram_range' : [(1,1), (1,2)],

'cv__max_df' : [1.0, 0.95],

'cv__min_df' : [1],

'cv__max_features' : [2000, 2250, 2500, 2750]

},

'tv' : {

'tv__stop_words' : [None, 'english'],

'tv__ngram_range' : [(1,1), (1,2)],

'tv__max_df' : [1.0, 0.95],

'tv__min_df' : [1, 2],

'tv__max_features' : [2000, 2250, 2500, 2750]

},

'hv' : {

'hv__stop_words' : [None, 'english'],

'hv__ngram_range' : [(1,1), (1,2)]

},

'ss' : {

'ss__with_mean' : [False]

},

'pf' : {

'pf__degree' : [2]

},

'lr' : {

'lr__C' : [1, .05],

'lr__penalty' : ['l2']

},

'bnb' : {

'bnb__alpha' : [1.0, 1.5, 1.8, 2.0]

},

'mnb' : {

'mnb__alpha' : [0.8, 1.0, 1.2]

},

'rf' : {

'rf__n_estimators' : [8, 10, 15]

},

'gb' : {

'gb__n_estimators' : [80, 100, 120]

},

'ab' : {

'ab__n_estimators' : [75, 50, 125]

},

'svc' : {

'svc__kernel' : ['linear','poly']

},

'knn' : {

'knn__n_neighbors' : [25,35,45]

}

}

# Create the parameters for GridSearch

params = dict()

if use_params:

for i in items:

for p in param_items[i]:

params[p] = param_items[i][p]

# Create the pipeline

pipe_list = [(i,pipe_items[i]) for i in items]

print("Using:")

for p in pipe_list:

print("\t" + str(p[1]).split('(')[0])

pipe = Pipeline(pipe_list)

# Grid search

gs = GridSearchCV(pipe, param_grid=params, verbose=1)

gs.fit(X_train, y_train)

# Print the results

train_params = gs.best_params_

train_score = gs.best_score_

y_test_hat = gs.predict(X_test)

test_score = gs.score(X_test, y_test)

for k in train_params:

print("{}: {}".format(k,train_params[k]))

print("Train score: {} Test score {}".format(train_score, test_score))

print("")

return train_score, test_score, y_test_hat, train_params

Choose some vectorizers and models to test.¶

- This runs a long time!

- TI ran this many times, updating the parameters to tune.

#Decide what to put into the pipline, grid searh, and save the "best" for each grid search

use_params = True

vects = ['cv','tv','hv']

models = ['lr','bnb', 'mnb','rf','gb','ab','svc','knn']

other = ['pf','ss']

# After some initial tests, these seem like the best to pursue further

vects = ['cv','tv']

models = ['lr','bnb', 'mnb']

other = []

model_solns = {}

idx = 0

for v in vects:

for i in range(len(other)+1):

for o in list(combinations(other, i)):

for m in models:

idx += 1

pipe_items = [v]

pipe_items.extend(list(o))

pipe_items.append(m)

[train_score, test_score, y_test_hat, best_params] = create_pipline(pipe_items, use_params,

X_train['title'], X_test['title'],

y_train, y_test)

model_solns[idx] = {'vectorizer' : v, 'model': m, 'features': list(o),

'train_score': train_score, 'test_score': test_score,

'best_params': best_params, 'y_test_hat' : y_test_hat}

Lots of results to examine. Unfortunately, none are exactly great. This is unsurprising given the two subreddits that I chose.

df_solns = pd.DataFrame(model_solns)

df_solns.sort_values(ascending=False, by='test_score',axis=1)

df_solns.loc[['vectorizer','model','train_score','test_score','best_params'],:]

Closely look at the parameters and see if we hit an boundary on our parameter options.¶

df_solns.loc[:,2]

# Let's look at the parameters of one solution

df_solns.loc['best_params',2]

Can get a better value by averaging our top models?¶

# Average the y_test_hat vectors to see if we can improve the score

y_test_hat_agg = sum(df_solns.loc['y_test_hat',:])

y_test_hat = np.round(y_test_hat_agg/df_solns.shape[1])

print("The accuracy score of the average test solutions is {:.2f}%.".format(accuracy_score(y_test,y_test_hat)*100))

This aggregated solution is actually a little better!

What are some of the "misses"?¶

# Not sure why I get warnings when adding these

X_test['y'] = y_test.values

X_test['y_hat'] = y_test_hat

X_test['y_hat_agg'] = y_test_hat_agg

Remember, where y=1, the post is from r/CapeCod.¶

X_test_incorrect = X_test[X_test["y"] != X_test['y_hat']]

X_test_incorrect.head(20)

With more data, I think some of these could be mode accurately predicted. For example:

- "Marstons Mills" is a town on Cape Cod.

- Fertitta is a chef in Galveston.

- Dog friendly restaurants?

- Batting cages???

Examine unigrams of the titles of incorrect predictions.¶

cv_simple = CountVectorizer(stop_words='english')

X_test_cv = cv_simple.fit_transform(X_test_incorrect['title'])

cv_test = pd.DataFrame(X_test_cv.todense(), columns=cv_simple.get_feature_names())

# Create a data frame of the most common words

n_words = 40

words = list(cv_test.sum().sort_values(ascending=False)[:n_words].index)

print(words)

cv_test['is_cape_test'] = X_test_incorrect['y'].values

cv_test_small = cv_test.groupby('is_cape_test').sum()[words]

columns = [c for c in cv_test_small.columns if c in cv_train.columns]

cv_train = cv_train[columns]

cv_train['is_cape'] = y_train.values

cv_train_small = cv_train.groupby('is_cape').sum()

columns = [c for c in cv_train.columns if c in cv_test_small.columns]

cv_test_small = cv_test_small[columns]

cv_merge = pd.concat([cv_train_small, cv_test_small],keys=['train', 'incorrect'], sort=True)

cv_merge.head()

# Adapted from https://matplotlib.org/examples/api/barchart_demo.html

words = cv_merge.columns

n_words = len(words)

words_count_cape_train = cv_merge.loc["train"].loc[0]

words_count_gv_train = cv_merge.loc["train"].loc[1]

words_count_cape_wrong = cv_merge.loc["incorrect"].loc[0]

words_count_gv_wrong = cv_merge.loc["incorrect"].loc[1]

width = 0.25 # the width of the bars

ind = np.arange(n_words)

fig, ax = plt.subplots(figsize=(15, 10))

rects0 = ax.bar(ind, words_count_cape_train, width, color=colors[0])

rects1 = ax.bar(ind+width, words_count_cape_wrong, width, color=colors[2])

rects2 = ax.bar(ind+2*width, words_count_gv_train, width, color=colors[4])

rects3 = ax.bar(ind+3*width, words_count_gv_wrong, width, color=colors[5])

# add some text for labels, title and axes ticks

ax.set_ylabel('Counts')

ax.set_title('Counts by word and reddit')

ax.set_xticks(ind + width / 2)

ax.set_xticklabels(words,rotation='vertical')

ax.set_ylim(0,80)

ax.legend((rects0, rects1, rects2, rects3), ('Cape Cod Train', 'Cape Cod Incorrect',

'Galveston', 'Gslveston Incorrect'));

This plot did not come out as well as I had hoped, but I think I can improve on it in the future.

The data¶

For this project, I chose two sub-reddits to compare, r/Cape Cod and r/galveston. As this was my first experience with Reddit, I had some challenege to come up with two forums to compare since most anything I chose did not have enough posts. With more experience with Reddit, I might have been able to chose more interesting topics to compare. Scraping was easy enough, but since the Reddits are not extremely active, I did not end up with as much data as I would have liked

Exploring the data¶

Once I had scraped the data, I ran a number of initial tests on it to get some insight. I used a word cloud to illustrate the word used most often in each subreddit. I then ran a vectorizer on unigrams and made some bar charts comparing the usage of the most words. The results were not particularly surprising, though there were some words I had not anticipated such as 'seawall' and 'gaming'.

Modeling the problem¶

From there, I dove into NLP. I ran a few simple tests, determined a baseline accuracy, and examined the coefficients determied by logistical regression. Again, the words with the most influence were not surprising - mostly place names or things unique to one place or the other. Actually, there was one word with a aurprisingly high coefficient - 'water'.

Evaluate the model¶

I then spent some time trying to generalize pipelines in order to efficiently run lots of different grid searches on many different models and parameters. This generalized function has room for improvement, but was quite helpful for me to stay organized in my tests. I saved the "best" from each test and turned the data into a dataframe in order to explore the incorrect predictions.

Despite tuning the parameters, I could not get better than 83% accuracy, unfortunately.